Retrieval Augmented Generation - enriching LLMs with internal company data

As part of this project, an application for Retrieval Augmented Generation (RAG) was developed. The aim of the application is to enrich Large Language Models (LLMs) with company-internal data in order to provide more precise and context-relevant answers to queries. Modern technologies such as Haystack, AWS, and Qdrant were used for this purpose.

RAG: Retrieval Augmented Generation #

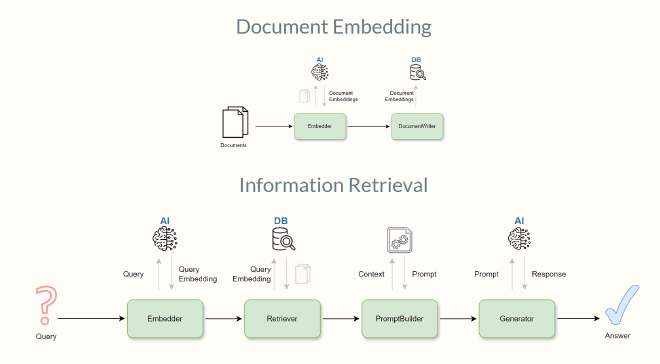

The project is based on Retrieval Augmented Generation (RAG), a method in which an LLM is supplied with external information when it answers a query. The aim is to make internal company documents and databases accessible in a user-friendly way. The document content was analyzed, embedded in a vector space, and stored; for each query, the most relevant passages were then retrieved and passed to the model as context for generating an informed answer.
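
The last step, passing retrieved passages to the model, can be sketched as follows. This is a minimal illustration and not the project's actual code: the function name `build_rag_prompt`, the prompt wording, and the sample passages are assumptions made for this example.

```python
from typing import List


def build_rag_prompt(question: str, retrieved_chunks: List[str]) -> str:
    """Combine retrieved document chunks and the user question into one prompt."""
    # Number each chunk so an answer can be traced back to its source passage.
    context = "\n\n".join(
        f"[{i}] {chunk.strip()}" for i, chunk in enumerate(retrieved_chunks, start=1)
    )
    # Hypothetical instruction text; a real system would tune this wording.
    return (
        "Answer the question using only the context below. "
        "If the context is insufficient, say so.\n\n"
        f"Context:\n{context}\n\n"
        f"Question: {question}\n"
        "Answer:"
    )


# Made-up example passages standing in for retrieved company documents.
prompt = build_rag_prompt(
    "How many vacation days do employees get?",
    [
        "Employees receive 30 vacation days per year.",
        "Vacation requests go through the HR portal.",
    ],
)
print(prompt)
```

Restricting the model to the supplied context is one common way to keep answers grounded in the company documents instead of the model's general training data.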

Technology stack #

Haystack #

The RAG system was built with the Haystack framework, which simplifies development by providing the necessary components and connecting them in pipelines. Haystack was used to build several pipelines that pre-process the documents, split them into chunks, and convert them into vector representations using an LLM. Haystack also offers interfaces to vector databases, so that these can be filled and searched easily.
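
To illustrate the splitting step, here is a small standard-library sketch of word-based chunking with overlap. It does not use Haystack's API; the function `split_into_chunks` and its parameters `chunk_size` and `overlap` are hypothetical names chosen for this example, and the project's actual splitting settings are not stated.

```python
from typing import List


def split_into_chunks(text: str, chunk_size: int = 50, overlap: int = 10) -> List[str]:
    """Split text into word-based chunks; consecutive chunks share `overlap` words."""
    if chunk_size <= 0 or not 0 <= overlap < chunk_size:
        raise ValueError("need chunk_size > 0 and 0 <= overlap < chunk_size")
    words = text.split()
    step = chunk_size - overlap  # how far the window advances each time
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + chunk_size]))
        if start + chunk_size >= len(words):
            break  # this chunk already reaches the end of the text
    return chunks


chunks = split_into_chunks("one two three four five six seven", chunk_size=4, overlap=2)
for chunk in chunks:
    print(chunk)
```

The overlap means a sentence that straddles a chunk boundary still appears intact in at least one chunk, which tends to help retrieval quality at the cost of some duplicated storage.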

Gradio #

To operate the application, an intuitive user interface was developed with Gradio. It allows users to submit queries, receive answers, and rate the question/answer pairs. The backend, which manages the requests and searches the vector database, was implemented with FastAPI, with Uvicorn as the ASGI server.

Qdrant vector database #

To search the documents efficiently, their content was stored in the open source vector database Qdrant. The vector database enabled a fast and precise search by saving the embeddings of the documents and making them queryable. Qdrant also comes with its own front end, in which the status of the vector database can be checked and the database can be managed.
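
What "making embeddings queryable" means can be shown with a toy in-memory index that ranks stored vectors by cosine similarity. This is a conceptual stand-in, not Qdrant's client API: the class `InMemoryVectorIndex`, its methods, and the two-dimensional example vectors are all assumptions for illustration, and a real vector database uses approximate nearest-neighbor indexing instead of this brute-force scan.

```python
import math
from typing import Dict, List, Tuple


def cosine_similarity(a: List[float], b: List[float]) -> float:
    """Cosine of the angle between two vectors; 0.0 if either has zero length."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


class InMemoryVectorIndex:
    """Hypothetical stand-in for a vector database: stores vectors, ranks by similarity."""

    def __init__(self) -> None:
        self._points: Dict[str, Tuple[List[float], str]] = {}

    def upsert(self, point_id: str, vector: List[float], text: str) -> None:
        # Insert a new point or overwrite an existing one with the same id.
        self._points[point_id] = (vector, text)

    def search(self, query_vector: List[float], top_k: int = 3) -> List[Tuple[float, str]]:
        # Score every stored vector against the query, best matches first.
        scored = [
            (cosine_similarity(query_vector, vector), text)
            for vector, text in self._points.values()
        ]
        scored.sort(key=lambda pair: pair[0], reverse=True)
        return scored[:top_k]


index = InMemoryVectorIndex()
index.upsert("doc-1", [1.0, 0.0], "Vacation policy")
index.upsert("doc-2", [0.0, 1.0], "Expense policy")
index.upsert("doc-3", [0.7, 0.7], "Onboarding guide")

hits = index.search([0.9, 0.1], top_k=2)
for score, text in hits:
    print(f"{score:.3f}  {text}")
```

In the real system the vectors would come from the embedding model and have hundreds or thousands of dimensions, but the ranking principle is the same.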

Large Language Models (LLMs) #

Various LLMs, such as Phi and Llama 3, were served through Ollama and used both to create the text/document embeddings and to generate responses. The models were deployed in a Docker container to keep the setup simple and scalable.
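
As a rough sketch of how a backend could talk to such an Ollama container, the following uses only the Python standard library to call Ollama's `/api/generate` REST endpoint. The host and port (`localhost:11434`, Ollama's default), the helper names, and the timeout are assumptions; inside a Docker Compose network the host would typically be the service name instead, and the project itself may well have gone through Haystack's integrations.

```python
import json
import urllib.request

# Assumption: Ollama is reachable on its default port on the local host.
OLLAMA_URL = "http://localhost:11434"


def build_generate_request(prompt: str, model: str = "llama3") -> urllib.request.Request:
    """Build (but do not send) a non-streaming generation request for Ollama."""
    payload = {"model": model, "prompt": prompt, "stream": False}
    return urllib.request.Request(
        f"{OLLAMA_URL}/api/generate",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def generate(prompt: str, model: str = "llama3", timeout: float = 120.0) -> str:
    """Send the request and return the generated text (requires a running Ollama server)."""
    request = build_generate_request(prompt, model)
    with urllib.request.urlopen(request, timeout=timeout) as response:
        # With "stream": False, Ollama returns one JSON object with a "response" field.
        return json.loads(response.read())["response"]


# Building the request needs no server, so it can be inspected offline.
request = build_generate_request("Summarize our vacation policy.")
print(request.full_url)
print(request.data.decode("utf-8"))
```

Calling `generate(...)` only works once the Ollama container is running and the chosen model has been pulled.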

AWS #

For deployment, the entire system (frontend, backend, vector database, and LLM) was run as a Docker Compose stack on AWS. The infrastructure provides easy access to powerful GPU resources and scales easily.

Conclusion #

The application shows how Retrieval Augmented Generation can extend the capabilities of LLMs by enriching them with company-internal data. By using modern technologies such as Haystack, Gradio, Qdrant, and Docker, a scalable and user-friendly solution was created that supports companies in answering queries automatically.

Demo #

If you are interested in a customized solution for your company or would like to see the application live in action, I would be happy to offer you a personal demonstration. Please contact me to book an appointment. Together we can prepare your company’s internal data and offer it efficiently to your employees or customers.

Make an appointment now!

Activities #

- Development of a RAG application in Python

- Implementation of various pipelines with Haystack

- Integration and deployment of various LLMs (Llama, Phi) with Ollama

- Development of a frontend with Gradio and a backend with FastAPI and Uvicorn

- Development of a frontend/backend architecture

- Management of documents in a Qdrant vector database

- Deployment of the entire application as a Docker Compose stack on AWS